Deployment

The last step is deployment, which takes the trained model and generates source code that runs on the target embedded device. There are two flows for deploying the generated model on the embedded device:

- The MTB-ML flow, which passes the Imagimob-generated model through the MTB-ML tooling to obtain the C implementation
- The Imagimob flow, which uses Imagimob's C implementation of the generated model

Each deployment option has a corresponding code example for quick deployment and learning.

When IMAGIMOB Studio generates the source, it provides three functions for interacting with the pre-processor (MTB-ML flow) or with the combined pre-processor and model (Imagimob flow). The table below lists these functions.

| Function | Pre-processor (MTB-ML flow) | Pre-processor + model (Imagimob flow) |
|---|---|---|
| [C_Prefix]_init | Initializes the pre-processor | Initializes the pre-processor and model |
| [C_Prefix]_enqueue | Feeds data to the pre-processor | Feeds data to the pre-processor and model |
| [C_Prefix]_dequeue | Returns a buffer with the pre-processed data, which is then fed to the MTB-ML generated source to get an inference | Returns the model output: the confidence of each classification |

For more information, see Imagimob’s API documentation.

If you want to deploy the model using the Imagimob flow, skip to Deploy model with Imagimob ML flow.

Deploy model with ModusToolbox™ ML flow

To deploy with the ModusToolbox™ ML flow, use the Deploy with MTB-ML code example. See the code example's README.md file for instructions on downloading and configuring the example and programming the device.

The code example requires both the generated .h5 model file and the Imagimob-generated model.c/.h files (pre-processor only).

To generate the files required for deployment:

- Download the model files as described in Model training and results.

-

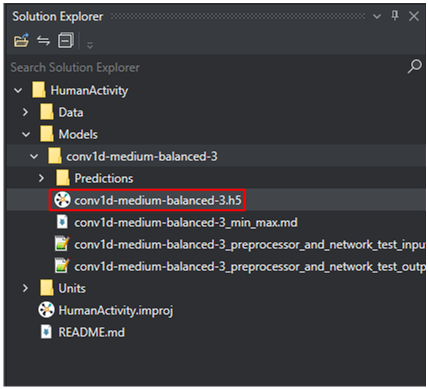

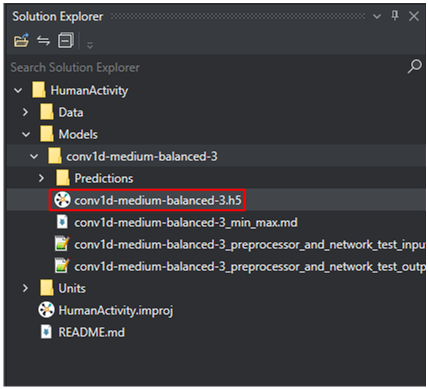

Double-click the *.h5 model in the project in the directory Models/(model name)

-

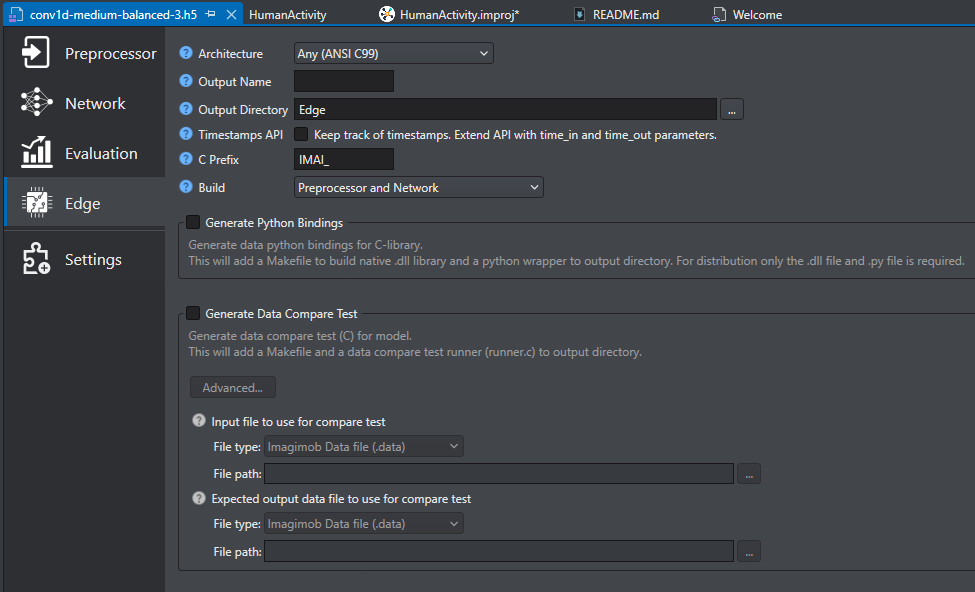

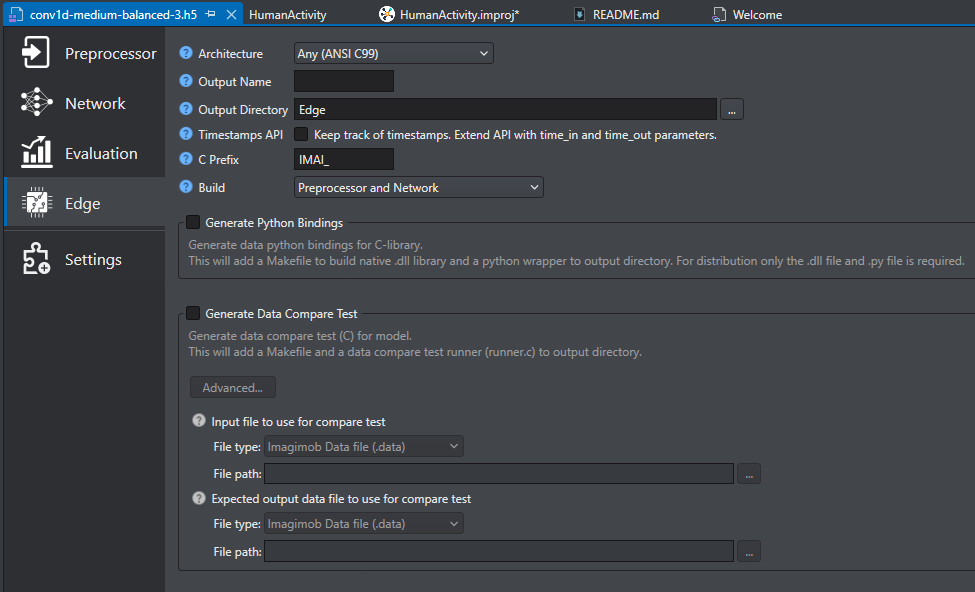

This will bring up a new page where the Preprocessor, Network, Evaluation, and Edge tabs can be accessed. Select the Edge tab to bring up the options.

The Edge tab allows users to generate output that can then be used on an embedded device.

- Configure the Build Edge parameters of the generated models to work with the code example. The parameters are listed in the table below:

  | Build Edge parameter | IMU based | PDM based | Description |
  |---|---|---|---|
  | Output Name | imu_model | pdm_model | The pre-processor is generated as .c/.h files with the name defined here |
  | C Prefix | IMAI_IMU_ | IMAI_PDM_ | Defines the prefix for the three functions required to use the pre-processor (init, enqueue, dequeue) |
  | Build | Preprocessor | Preprocessor | Only the pre-processor is generated in the .c/.h files |

- Select Build Edge to generate the source. When the Edge Generation Report appears, select OK.

-

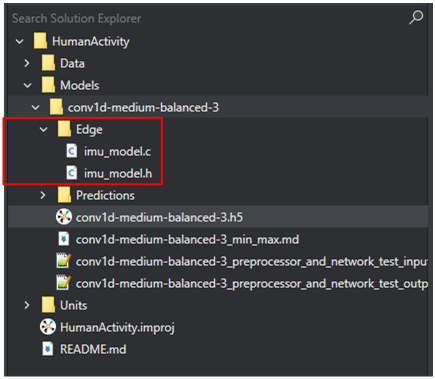

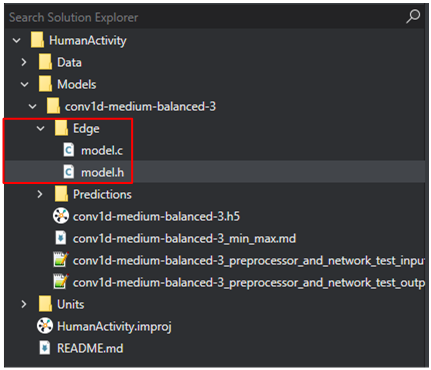

The pre-processing .c/h files are generated under Models/(model name)/Edge folder.

- Copy the files listed in the table below from the IMAGIMOB Studio project into the mtb-example-ml-imagimob-MTB-ML-deploy project.

  | Files | From IMAGIMOB Studio | To mtb-example-ml-imagimob-MTB-ML-deploy |
  |---|---|---|
  | (imu/pdm)_model.c/.h | Models/(model_name)/Edge | Edge/IMU or Edge/PDM |
  | (model_name).h5 | Models/(model_name) | Edge/IMU or Edge/PDM |

- The source code for the .h5 model can now be generated using the ML Configurator.

-

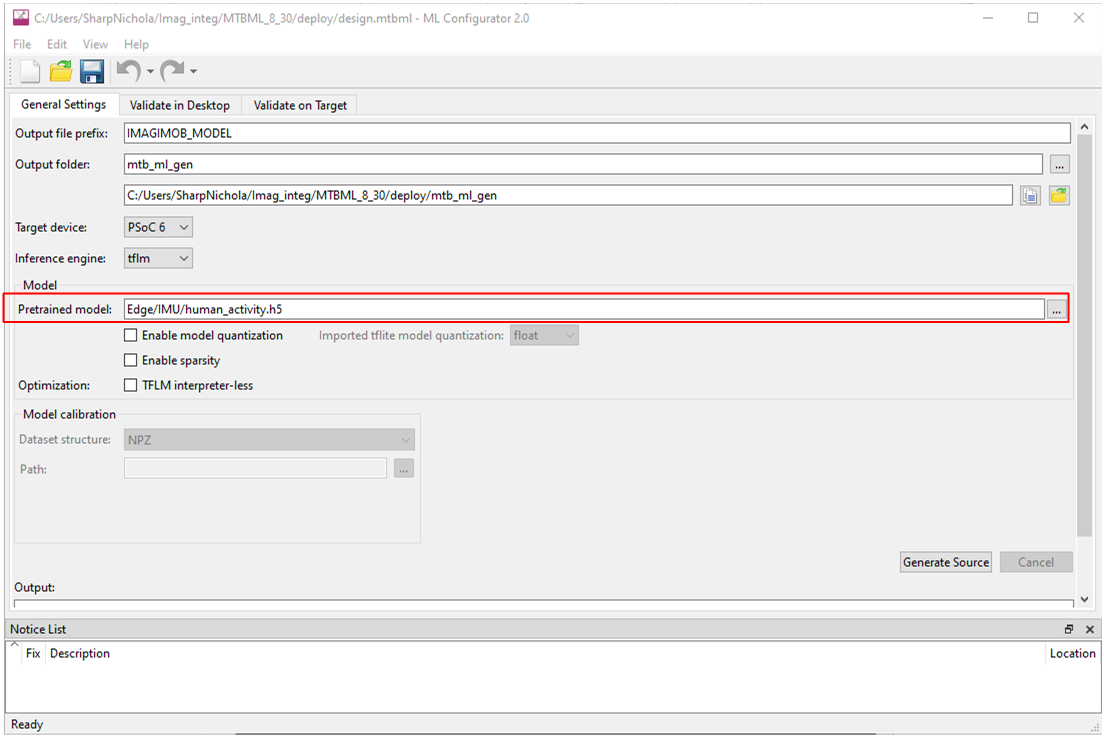

Open the ML Configurator in the eclipse quick panel or run 'make ml-configurator' in the command console and point the Pretrained model input field to the model that was copied into the ModusToolbox™ project.

- Select Generate Source to generate the model files in the mtb_ml_gen folder of the ModusToolbox™ project.

- To configure the application to run an IMU-based model, open source/config.h in the ModusToolbox™ project and set INFERENCE_MODE_SELECT = IMU_INFERENCE. To run a PDM-based model instead, set INFERENCE_MODE_SELECT = PDM_INFERENCE.

- Program the device with the configured application.

- Open a terminal program and select the KitProg3 COM port. Set the serial port parameters to 8N1 and 115200 baud.

-

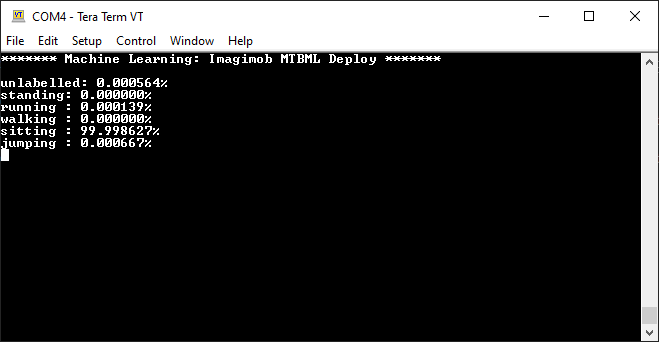

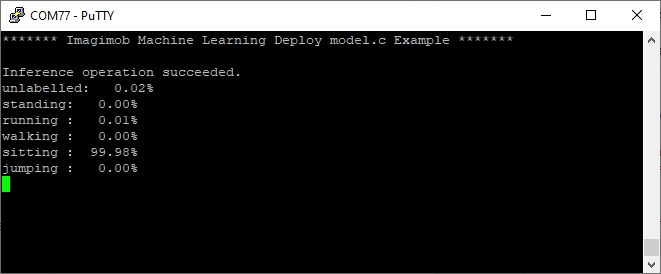

The terminal displays the inferencing result . The example uses Human Activity Recognition model.

- If the Human Activity Recognition model was used, perform one of the following activities: standing, running, walking, sitting, or jumping. Use the figures in the Code example is configured for IMU data collection section to determine how the device should be held. Watch the confidence of each classification change as you complete an action.

If using the Baby Crying detection model, play baby crying sounds and watch the confidence change for baby crying as the audio is played.

For more information, see the code example README.md.

Deploy model with Imagimob ML flow

To deploy with the Imagimob flow, use the Deploy with Imagimob code example. See the code example's README.md file for instructions on downloading and configuring the example and programming the device. This code example supports only models generated from IMU data. For PDM-based models, see Deploy model with ModusToolbox™ ML flow.

The code example requires the Imagimob-generated model.c/.h files (pre-processor + model).

To generate the files required for deployment:

- Download the model files as described in Model training and results.

- Double-click the *.h5 model in the project under Models/(model name).

- A new page opens with the Preprocessor, Network, Evaluation, and Edge tabs. Select the Edge tab to display the options shown in Figure 46. The Edge tab lets you generate output that can be used on an embedded device.

- To make the generated model work with the code example, configure the following options:

  | Build Edge parameter | IMU based | Description |
  |---|---|---|
  | Output Name | model | The pre-processor and model are generated as .c/.h files with the name defined here |
  | C Prefix | IMAI_ | Defines the prefix for the three functions required to use the pre-processor and model (init, enqueue, dequeue) |
  | Build | Preprocessor and Network | Both the pre-processor and the model (network) are generated in the .c/.h files |

- Select Build Edge to generate the source. When the Edge Generation Report appears, select OK.

- The model .c/.h files are generated in the Models/(model name)/Edge folder.

- Copy the model.c/.h files from the Models/(model name)/Edge folder in the Imagimob project to the Edge folder in the mtb-example-ml-imagimob-deploy project.

- Program the device with the configured application.

- Open a terminal program and select the KitProg3 COM port. Set the serial port parameters to 8N1 and 115200 baud.

- The terminal displays the inference results. The example uses the Human Activity Recognition model.

- If the Human Activity Recognition model was used, perform one of the following activities: standing, running, walking, sitting, or jumping. Use the figures in the Code example is configured for IMU data collection section to determine how the device should be held. Watch the confidence of each classification change as you complete an action.

If using the Baby Crying detection model, play baby crying sounds and watch the confidence change for baby crying as the audio is played.

In this tutorial, you learned how to use the Infineon PSoC™ 6, the ModusToolbox™ machine learning solution, and Imagimob to solve machine learning problems. To learn more about the solution and stay up to date with the latest information and releases, visit https://www.infineon.com/cms/en/design-support/tools/sdk/modustoolbox-software/modustoolbox-machine-learning

For the complete list of machine learning code examples available, see the Infineon GitHub repository.